Digital Emotions - Multimodal Affective AI Engine for Emotion Analysis in Natural Data

Welcome to the Digital Emotions demo site.

Existing approaches to emotion analysis are limited in three important ways. First, they conceptualise the focal emotion construct in relatively simple terms, such as positive vs. negative, happy vs. not happy, or sad vs. not sad. This is a fundamental limitation: it captures only the categorical feature of emotions, not their dimensionality or intensity. Second, existing approaches have been dominated by reliance on a single modality, i.e., visual or facial-expression-based features. Third, existing systems tend to focus on monolingual speech and assume that the speaker communicates only in English. As a result, such systems are inevitably confined to the Western cultural norms, lens, and perspectives inherent in that language.

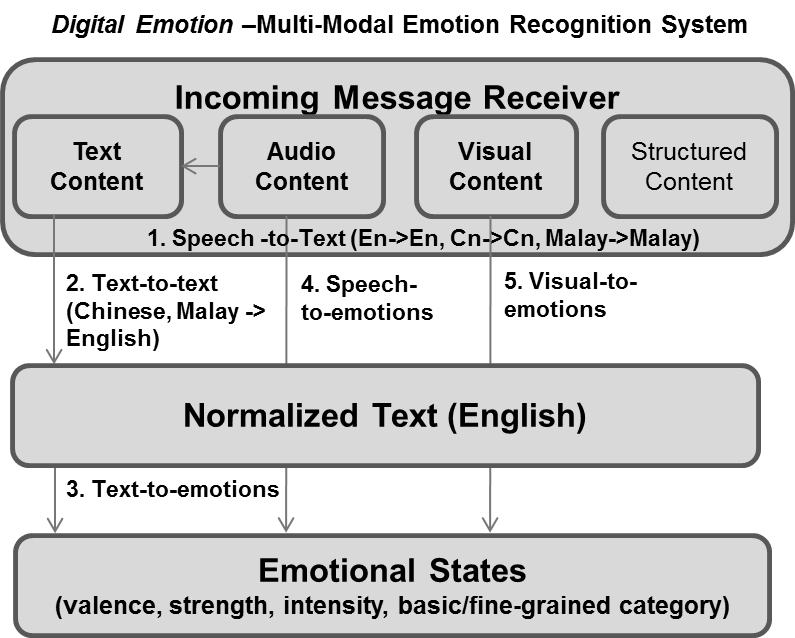

The Digital Emotions technology is an integrative, multimodal, and systematic approach to analysing emotional expressions in natural videos, using features from facial expressions, acoustic signals, and linguistic content.

Our research and technological innovation rest on three key design considerations: 1) a multi-theoretic grounding in emotion concepts, 2) the need for sociocultural-level analysis and understanding of emotions, and 3) the use of systematically proven, pre-trained technologies as platform components. These design considerations clearly differentiate our work from existing approaches.

The system offers three main benefits to users.

1) Accepts multimodal data inputs: text (speech transcripts, tweets, comments, etc. in .csv format), audio/speech (.wav or .mp4), and video (.mp4).

2) Analyses and produces multidimensional emotion outputs: the output variables are the algorithms’ predicted emotional expressions, described by emotion type (fear, anger, sadness, joy, etc.), emotion dimensions (valence and arousal), and emotion intensity (from 0, barely noticeable, to 1, extremely intense).

3) Supports three main speaker languages: English (ready), Chinese (ready), and Malay (preliminary).
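For readers planning to integrate with the platform, the input formats and output variables listed above can be pictured as a small data model. The Python sketch below is hypothetical: `INPUT_FORMATS`, `candidate_modalities` and `EmotionPrediction` are illustrative names, not the platform's actual API, and the valence and arousal scales are deliberately left unspecified because they are not stated here. It maps a file extension to the modalities the file could be submitted as (note that .mp4 is listed for both audio/speech and video) and enforces the documented 0 to 1 intensity range.

```python
from dataclasses import dataclass
from pathlib import Path

# Hypothetical mapping of input modality to accepted file extensions,
# taken from the list above. Note that .mp4 appears under both audio and video.
INPUT_FORMATS = {
    "text": {".csv"},
    "audio": {".wav", ".mp4"},
    "video": {".mp4"},
}


def candidate_modalities(filename: str) -> set[str]:
    """Return the modalities a file could be submitted as, based on its extension."""
    suffix = Path(filename).suffix.lower()
    return {modality for modality, exts in INPUT_FORMATS.items() if suffix in exts}


@dataclass(frozen=True)
class EmotionPrediction:
    """Hypothetical record for one predicted emotional expression (illustrative fields)."""

    emotion_type: str  # categorical label, e.g. "fear", "anger", "sadness", "joy"
    valence: float     # dimensional score; scale not specified in this document
    arousal: float     # dimensional score; scale not specified in this document
    intensity: float   # 0 = barely noticeable, 1 = extremely intense

    def __post_init__(self) -> None:
        # Reject intensities outside the documented 0-1 scale.
        if not 0.0 <= self.intensity <= 1.0:
            raise ValueError(f"intensity must be in [0, 1], got {self.intensity}")
```

For example, `candidate_modalities("interview.mp4")` returns `{"audio", "video"}`, so a caller would still need to state which modality an .mp4 file should be analysed as.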

The Digital Emotions platform technology was developed through a collaborative project across multiple A*STAR research institutes (RIs), spearheaded by A*STAR’s Science and Engineering Research Council and A*STAR Enterprise, and subsequently supported by A*STAR’s A*ccelerate Gap Fund. The research team comprises scientists and engineers from A*STAR’s Institute of High Performance Computing (IHPC), A*STAR’s Institute for Infocomm Research (I2R), and the University of Illinois’ Advanced Digital Sciences Center in Singapore (ADSC).