“Communication is 93% nonverbal” is a widely misused myth: Real-world communication is multimodal and dynamic

“Communication is 93% nonverbal” is widely cited and used in practical applications such as professional training. However, beyond several other limitations of the original study (Mehrabian and Ferris, 1967), a key limitation is that the experiment only had a speaker say one single word, “Maybe”, in positive, negative, and neutral tones of voice and facial expressions.

The communication literature has already “debunked” this myth: real-world communication is far more complex than non-verbal cues alone and also depends on the language content (see Lapakko, 1997). In addition, the field is dominated by a visual paradigm, in which most emotion analysis software analyses only facial expressions (see Barrett et al., 2019, for a review).

Our innovation and approach

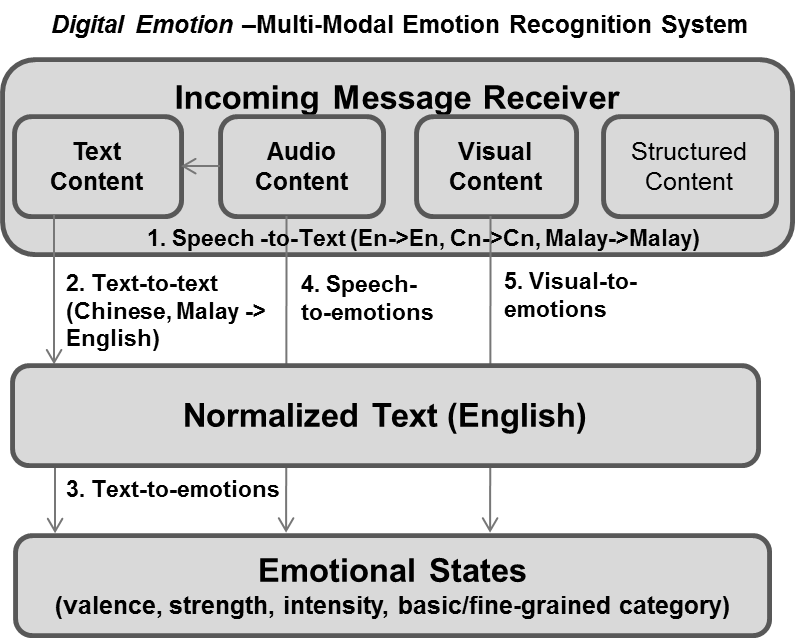

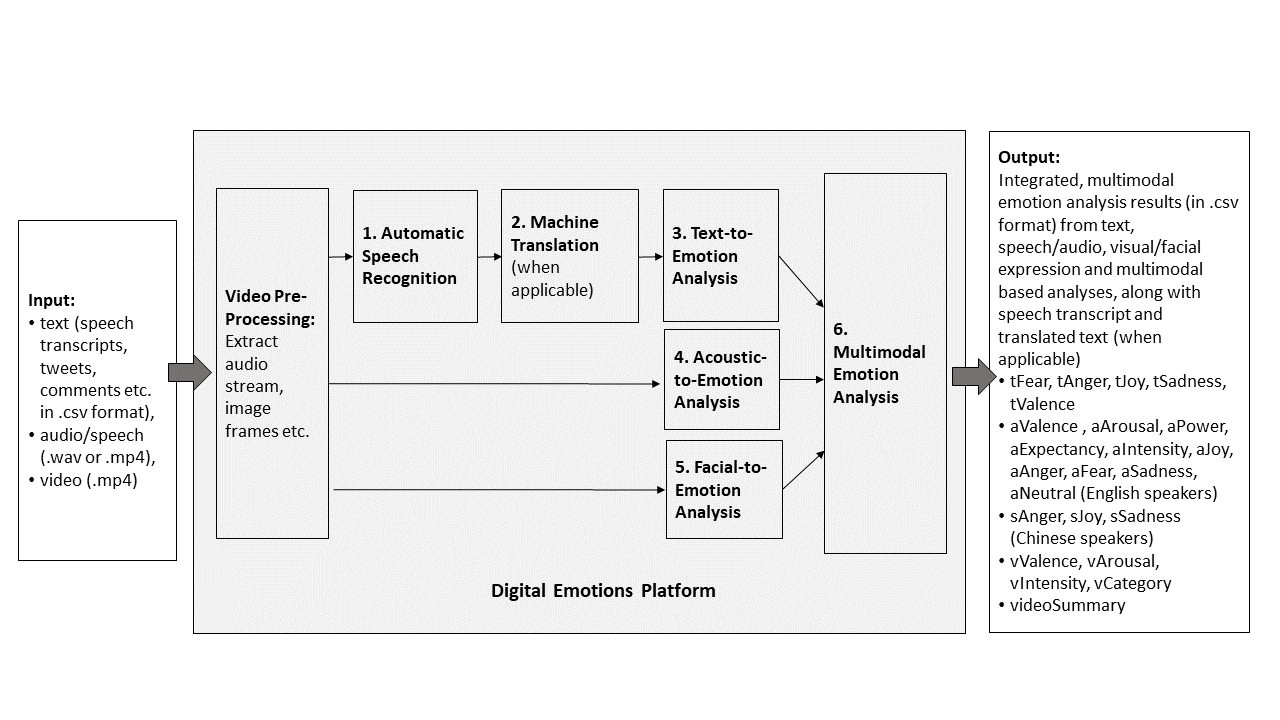

The key motivation behind Project Digital Emotion is the kernel design theory that, in the real world, emotional communication is a multidimensional, multimodal, and dynamic function that varies with a diverse range of extrinsic and intrinsic contextual factors. We believe that multimodal AI capabilities, when used appropriately, can enable more flexible and holistic applications and use cases.

To enable effective, systematic analysis, the technology uses five pre-trained emotion feature extraction and pre-processing engines, which collectively serve as modular, independent components that pre-process the input and extract features for the multimodal layer. These source components can be flexibly selected and switched on for a given application scenario. To generate the open-domain multimodal analysis summary for the base platform demonstrator, we pre-trained machine learning-based algorithms on three modality-specific feature sets (text-, speech-, and facial expression-based features) extracted from manually labelled public video clips. This multimodal component can also be retrained and customised for domain-specific application needs and training data.
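The modular design described above can be sketched as independent, switchable extractors feeding one fusion layer. This is a minimal illustration, assuming a simple linear multimodal layer over per-modality features; the component names (`text`, `speech`, `face`), the toy extractors, and the weights are hypothetical placeholders, not Digital Emotions' actual engines, interface, or trained model.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# A hypothetical extractor maps one clip (a plain dict of raw inputs here)
# to a fixed-length feature vector for its own modality.
Extractor = Callable[[dict], List[float]]


@dataclass
class MultimodalPipeline:
    """Independent, selectable components feeding one multimodal layer."""

    extractors: Dict[str, Extractor]
    # Parameters of a toy linear multimodal layer, one weight list per
    # component; retraining for a new domain would replace these values.
    weights: Dict[str, List[float]]
    bias: float = 0.0
    enabled: List[str] = field(default_factory=list)

    def enable(self, *names: str) -> None:
        """Switch on components for the current application scenario."""
        for name in names:
            if name not in self.extractors:
                raise KeyError(f"unknown component: {name}")
            if name not in self.enabled:
                self.enabled.append(name)

    def features(self, clip: dict) -> Dict[str, List[float]]:
        """Run each enabled extractor on its own; disabled ones never run."""
        return {name: self.extractors[name](clip) for name in self.enabled}

    def predict(self, clip: dict) -> float:
        """Feature-level fusion: one linear score over all enabled features."""
        score = self.bias
        for name, vector in self.features(clip).items():
            score += sum(w * x for w, x in zip(self.weights[name], vector))
        return score


# Usage with toy extractors standing in for the text, speech and face engines.
pipeline = MultimodalPipeline(
    extractors={
        "text": lambda clip: [float(clip["text"].count("!"))],
        "speech": lambda clip: [clip["pitch_variation"]],
        "face": lambda clip: [clip["smile"]],
    },
    weights={"text": [0.5], "speech": [1.0], "face": [2.0]},
)
pipeline.enable("text", "face")  # speech switched off for this scenario
clip = {"text": "Great!!", "pitch_variation": 0.3, "smile": 0.25}
print(pipeline.predict(clip))  # 0.5 * 2 + 2.0 * 0.25 = 1.5
```

Keeping every extractor behind the same callable interface is what lets components be switched on per scenario; in this sketch, customising the multimodal layer for a new domain would amount to replacing `weights` and `bias`.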

Emotion feature extraction

Multidimensional Emotion Intensity Analysis from Natural Language (CrystalFeel)

Dimensional and Categorical Emotion Recognition (AcousEmo)

Facial Expression Analysis (FEA)

Pre-processing

Video Pre-processing

English / Chinese / Malay Automatic Speech Recognition (ASR)

Chinese / Malay to English Machine Translation (MT)

Multimodal emotion analysis

Multimodal emotion analysis in open-domain videos

System availability

Digital Emotions is currently available as a Docker image. The minimum system requirements to deploy and run it are as follows:

72 GB RAM

4-core CPU

Docker environment

Operation does not require an Internet connection
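Before pulling the image, a host can be checked against these minimums. The sketch below is not part of Digital Emotions: the thresholds are copied from the list above, while the helper names are hypothetical. Note that `os.cpu_count()` reports logical CPUs rather than physical cores, and that reading total RAM through `os.sysconf` works on Linux but may need another method (for example the third-party `psutil` package) elsewhere.

```python
import os
import shutil
from typing import List, Tuple

# Minimum requirements as listed in the documentation.
MIN_RAM_GB = 72
MIN_CPU_CORES = 4


def meets_minimum(ram_gb: float, cpu_cores: int, has_docker: bool) -> List[str]:
    """Return a list of unmet requirements (empty if all are met)."""
    problems = []
    if ram_gb < MIN_RAM_GB:
        problems.append(f"RAM: {ram_gb:.1f} GB < {MIN_RAM_GB} GB")
    if cpu_cores < MIN_CPU_CORES:
        problems.append(f"CPU: {cpu_cores} cores < {MIN_CPU_CORES} cores")
    if not has_docker:
        problems.append("Docker: 'docker' executable not found on PATH")
    return problems


def host_resources() -> Tuple[float, int, bool]:
    """Read total RAM (GiB), logical CPU count and Docker presence (Linux)."""
    ram_bytes = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
    cpus = os.cpu_count() or 0
    return ram_bytes / 1024**3, cpus, shutil.which("docker") is not None


if __name__ == "__main__":
    problems = meets_minimum(*host_resources())
    print("OK" if not problems else "\n".join(problems))
```

Because the operating system reserves some memory, a machine with exactly 72 GB installed may report slightly less, so a near miss on RAM is better treated as a prompt to check than as a hard failure.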

The multidimensional emotion concepts supported

The Digital Emotions system generates emotion analysis results based on seven emotion-related concepts: fear, anger, joy, sadness, valence, arousal, and emotion intensity.

Although Digital Emotions’ algorithms compute the various emotion output dimensions independently, users may find it helpful to consider the primary emotions in the following order: fear, anger, joy, and sadness. This order is consistent with Robert Plutchik’s psychoevolutionary theory of emotion (Plutchik, 1991).
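One way to hold the seven outputs in client code is a small record type that also exposes the suggested reading order. This is a sketch only: the class and field names are hypothetical, and the score ranges in the docstring are assumptions, since the scales used by Digital Emotions are not stated here.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Suggested reading order for the primary emotions.
PRIMARY_ORDER = ("fear", "anger", "joy", "sadness")


@dataclass(frozen=True)
class EmotionResult:
    """Hypothetical record for the seven emotion-related outputs.

    Assumed scales (not documented here): the four emotion scores and
    intensity in [0, 1]; valence and arousal in [-1, 1], 0 = neutral.
    """

    fear: float
    anger: float
    joy: float
    sadness: float
    valence: float
    arousal: float
    intensity: float

    def primary(self) -> List[Tuple[str, float]]:
        """Primary emotion scores in the suggested reading order."""
        return [(name, getattr(self, name)) for name in PRIMARY_ORDER]

    def strongest(self) -> str:
        """Highest-scoring primary emotion; ties go to the earlier one.

        The scores are computed independently (they need not sum to 1),
        so this comparison assumes they share a common scale.
        """
        return max(self.primary(), key=lambda item: item[1])[0]


result = EmotionResult(fear=0.1, anger=0.6, joy=0.05, sadness=0.3,
                       valence=-0.4, arousal=0.5, intensity=0.55)
print(result.primary())
print(result.strongest())  # anger
```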

Fear is an unpleasant emotion arising from a perceived threat, danger, pain, or harm. Fear often leads to confronting or escaping the threat and, in extreme cases, to freezing or paralysis.

In Digital Emotions, the conceptual meaning of fear is consistent with Plutchik’s psychoevolutionary theory of emotions (Plutchik, 1980; Plutchik, 1991, pp. 72-78). Fear may range from low-intensity worry to high-intensity terror.

Fear is perhaps the most frequently discussed emotion in the literature, plausibly because of its functions from the psychoevolutionary perspective. Synonyms of fear may include apprehension, anxiety, worry, scared, dread, horror, and terror (Ortony et al., 1988).

Anger is an unpleasant emotion involving a strong, uncomfortable, and hostile response to a perceived provocation, hurt, or threat. Anger usually has many physical and mental consequences.

In Digital Emotions, the conceptual meaning of anger is consistent with Plutchik’s psychoevolutionary theory of emotions (Plutchik, 1980; Plutchik, 1991, pp. 78-84). Anger may range from low-intensity annoyance to high-intensity rage.

In Digital Emotions, anger may correspond to a group of four fine-grained emotions discussed in the OCC (Ortony, Clore, and Collins) appraisal theory of emotions: resentment (or envy, jealousy), reproach (or contempt), anger, and disliking (or disgust, hate) (Ortony et al., 1988).

Joy is a positive emotion of gladness, delight, or exultation of the spirit arising from a sense of well-being or satisfaction. In English, joy may refer to a particular feeling of “great happiness”.

In Digital Emotions, the conceptual meaning of joy is consistent with Plutchik’s psychoevolutionary theory of emotions (Plutchik, 1980; Plutchik, 1991, pp. 85-91). Joy may range from low-intensity contentment to high-intensity elation.

In Digital Emotions, joy may correspond to a broad group of eleven fine-grained, positively valenced emotions discussed in the OCC appraisal theory of emotions: joy (happiness, elation), happy-for, gloating, hope (anticipation, optimistic), satisfaction, relief, pride, admiration (appreciation), gratitude (thankfulness, appreciation), gratification (self-satisfaction, smug, pleased-with-oneself), and liking (affection, attracted-to, love) (Ortony et al., 1988).

Sadness is an unpleasant emotion characterized by feelings of loss, disadvantage, helplessness, disappointment, sorrow, and despair. Sadness may often lead to silence, inaction, withdrawal from others, and in extreme cases, depression.

In Digital Emotions, the conceptual meaning of sadness is consistent with Plutchik’s psychoevolutionary theory of emotions (Plutchik, 1980; Plutchik, 1991, pp. 91-95 on “grief”). Sadness may range from low-intensity distress to high-intensity grief.

In Digital Emotions, sadness may correspond to a broad group of six fine-grained emotions discussed in the OCC appraisal theory of emotions: distress (unhappy, sad, grief), sorry-for (pity), fears-confirmed, disappointment, shame (self-reproach, guilt, embarrassment), and remorse (self-anger) (Ortony et al., 1988).
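The OCC groupings for anger, joy, and sadness amount to a lookup table from fine-grained OCC emotion types to the coarser Digital Emotions categories. The sketch below encodes the groupings as the text lists them; the variable and function names are hypothetical, and nothing here is claimed to be how Digital Emotions itself uses OCC types.

```python
from typing import Dict, List, Optional

# OCC emotion types grouped under Digital Emotions categories, as listed
# in the text ("may correspond", so treat these as loose matches).
# Only OCC type names are included, not their synonyms; "appreciation"
# is treated as a synonym (the text lists it under gratitude), leaving
# the eleven joy types the text counts. No OCC grouping is given for fear.
CATEGORY_TO_OCC: Dict[str, List[str]] = {
    "anger": ["resentment", "reproach", "anger", "disliking"],
    "joy": ["joy", "happy-for", "gloating", "hope", "satisfaction",
            "relief", "pride", "admiration", "gratitude",
            "gratification", "liking"],
    "sadness": ["distress", "sorry-for", "fears-confirmed",
                "disappointment", "shame", "remorse"],
}

# Invert once into an OCC type -> category lookup table.
OCC_LOOKUP: Dict[str, str] = {
    occ_type: category
    for category, occ_types in CATEGORY_TO_OCC.items()
    for occ_type in occ_types
}


def category_for(occ_type: str) -> Optional[str]:
    """Digital Emotions category for an OCC type, or None if not listed."""
    return OCC_LOOKUP.get(occ_type.strip().lower())


print(category_for("fears-confirmed"))  # sadness
print(category_for("Gloating"))         # joy
```

Note that "fears-confirmed" falls under sadness rather than fear, following the grouping in the text.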

Valence, in affective sciences, refers to the degree of pleasantness of a feeling or an emotion (Russell, 1980).

Valence is often used interchangeably with “sentiment”, although the two are conceptually different: strictly speaking, “sentiment” need not involve emotions per se. For example, “this product is very useful and easy to use” does not express an emotion, but its sentiment is positive.

Arousal, in affective sciences, refers to the degree of physiological activity (e.g., a raised voice, facial expressions, body movements) associated with a feeling or an emotion (Russell, 1980).
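Because valence and arousal are the two axes of Russell's (1980) circumplex model, a pair of scores can be read as a point on that plane. This sketch assumes both scores are scaled to [-1, 1] with 0 as neutral, which is an assumption rather than a documented Digital Emotions output format; the function names are hypothetical.

```python
import math
from typing import Tuple


def circumplex_position(valence: float, arousal: float) -> Tuple[float, float]:
    """Angle in degrees [0, 360) and distance from the neutral origin.

    Valence is the horizontal axis and arousal the vertical axis, both
    assumed to lie in [-1, 1] with 0 meaning neutral.
    """
    angle = math.degrees(math.atan2(arousal, valence)) % 360.0
    distance = math.hypot(valence, arousal)
    return angle, distance


def quadrant(valence: float, arousal: float) -> str:
    """Coarse quadrant label; zero is counted as the positive side."""
    pleasantness = "pleasant" if valence >= 0 else "unpleasant"
    activation = "high-arousal" if arousal >= 0 else "low-arousal"
    return f"{pleasantness}, {activation}"


print(circumplex_position(0.0, 1.0))
print(quadrant(-0.6, 0.7))  # unpleasant, high-arousal
```

For example, a point at valence -0.6 and arousal 0.7 falls in the unpleasant, high-arousal quadrant, the region where Russell's model places states such as tension and distress.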

The intensity of an emotion refers to the degree of an emotional experience, which can range from absent or barely noticeable to highly intense (Frijda, 1986; Frijda et al., 1992; Ortony et al., 1988).

Emotion intensity can be considered a variable measuring the “depth” of the emotion.

Related publications

The following publications relate to the development of Digital Emotions and are listed in chronological order of publication.

Studies [6, 1, 7] present the development and evaluation of the core visual, speech, and text-based emotion analysis technologies, respectively. Studies [2, 10] relate to the machine translation capability. Studies [5, 8, 9] describe techniques for the automatic speech processing capability. Studies [3, 11] present the line of work on multimodal systems, including empirical analysis demonstrating the value of multimodal systems.

[1] Poon-Feng, K., Huang, D-Y., Dong, M., and Li, H. (2014). Acoustic emotion recognition based on fusion of multiple features-dependent Deep Boltzmann Machines. ISCSLP 2014.

[2] Wu, K., Wang, X., Zhou, N., Aw, A.T., and Li, H. (2015). Joint Chinese word segmentation and punctuation prediction using deep recurrent neural network for social media data. IALP 2015.

[3] Gupta, R.K., and Yang, Y. (2016). Leveraging multi-modal analyses and online knowledge base for video aboutness generation. ISVC 2016.

[4] Gupta, R.K., and Yang, Y. (2017). CrystalNest at SemEval-2017 Task 4: Using sarcasm detection for enhancing sentiment classification and quantification. SemEval 2017.

[5] Do, V.H., Chen, N.F., Lim, B.P., and Hasegawa-Johnson, M. (2017). Multi-task learning using mismatched transcription for under-resourced speech recognition. INTERSPEECH 2017.

[6] Vonikakis, V., Subramanian, R., Arnfred, J., and Winkler, S. (2017). A probabilistic approach to people-centric photo selection and sequencing. IEEE Transactions on Multimedia, 2017.

[7] Gupta, R.K., and Yang, Y. (2018). CrystalFeel at SemEval-2018 Task 1: Understanding and detecting intensity of emotions using affective lexicons. SemEval 2018.

[8] Nguyen, M., Ngo, G.H., and Chen, N. (2018). Multimodal neural pronunciation modeling for spoken languages with logographic origin. EMNLP 2018.

[9] Ngo, G.H., Nguyen, M., and Chen, N. (2018). Phonology-augmented statistical framework for machine transliteration using limited linguistic resources. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 2019.

[10] Si, C., Wu, K., Aw, A.T., and Kan, M-Y. (2019). Sentiment aware neural machine translation. Proceedings of the 6th Workshop on Asian Translation, 2019.

[11] Bhattacharya, P., Gupta, R.K., and Yang, Y. (2021). Exploring the contextual factors affecting multimodal emotion recognition in videos. IEEE Transactions on Affective Computing, 2021, in press.

While these references show the underlying science and the experimental processes behind the approach, note that the technologies may have since evolved and been improved with more advanced components. The latest engine therefore does not necessarily follow the descriptions in the published papers.

References and advanced readings

The following provides some helpful references and advanced readings related to Digital Emotions’ psychological and conceptual foundations.

1) Barrett, L. F., Adolphs, R., Marsella, S., Martinez, A. M., & Pollak, S. D. (2019). Emotional expressions reconsidered: Challenges to inferring emotion from human facial movements. Psychological Science in the Public Interest, 20(1), 1–68.

2) Clore, G. L., Ortony, A., & Foss, M. A. (1987). The psychological foundations of the affective lexicon. Journal of Personality and Social Psychology, 53(4), 751–766.

3) Ekman, P. (1993). Facial expression and emotion. American Psychologist, 48(4), 384–392.

4) Gupta, R. K., & Yang, Y. (2019). Predicting and understanding news social popularity with emotional salience features. 27th ACM International Conference on Multimedia (ACM MM 2019), 21–25 October 2019, Nice, France.

5) Kramer, A. D., Guillory, J. E., & Hancock, J. T. (2014). Experimental evidence of massive-scale emotional contagion through social networks. Proceedings of the National Academy of Sciences, 111(24), 8788–8790.

6) Frijda, N. H. (1986). The emotions: Studies in emotion and social interaction. London, England: Cambridge University Press.

7) Frijda, N. H., Ortony, A., Sonnemans, J., & Clore, G. L. (1992). The complexity of intensity: Issues concerning the structure of emotion intensity. In M. S. Clark (Ed.), Review of Personality and Social Psychology, No. 13. Emotion (pp. 60–89). Sage Publications, Inc.

8) Lapakko, D. (1997). Three cheers for language: A closer examination of a widely cited study of nonverbal communication. Communication Education, 46, 63–67.

9) Lerner, J. S., & Keltner, D. (2001). Fear, anger, and risk. Journal of Personality and Social Psychology, 81(1), 146–159.

10) Mehrabian, A., & Ferris, S. R. (1967). Inference of attitudes from nonverbal communication in two channels. Journal of Consulting Psychology, 31(3), 248–252.

11) Ortony, A., Clore, G. L., & Collins, A. (1988). The cognitive structure of emotions. Cambridge University Press.

12) Ortony, A., & Turner, T. J. (1990). What’s basic about basic emotions? Psychological Review, 97, 315–331.

13) Plutchik, R. (1980). A general psychoevolutionary theory of emotion. In R. Plutchik & H. Kellerman (Eds.), Emotion: Theory, research, and experience: Vol. 1. Theories of emotion (pp. 3–33). New York: Academic Press.

14) Plutchik, R. (1991). The emotions (Rev. ed.). University Press of America.

15) Russell, J. A. (1980). A circumplex model of affect. Journal of Personality and Social Psychology, 39(6), 1161–1178.

16) Shaver, P., Schwartz, J., Kirson, D., & O’Connor, C. (1987). Emotion knowledge: Further exploration of a prototype approach. Journal of Personality and Social Psychology, 52(6), 1061–1086.

17) Sonnemans, J., & Frijda, N. H. (1994). The structure of subjective emotional intensity. Cognition and Emotion, 8(4), 329–350.

18) Sonnemans, J., & Frijda, N. H. (1995). The determinants of subjective emotional intensity. Cognition and Emotion, 9(5), 483–506.